In recent days, some of the world’s largest tech companies released new transparency reports, opened up their content moderation guidelines, and adopted approaches to fighting pernicious content as they tried to head off government regulation amid concerns about “fake news,” harassment, terrorism and other ills proliferating on their platforms.

The moves come against a backdrop of governments across Europe and in the U.S. discussing regulations and ways to combat problems that seem to flourish on social media, and of journalists and outlets in authoritarian countries pushing back against requests for their accounts to be blocked or taken down in an attempt to silence them.

Stemming the flow of fraudulent news and extremist content, combatting hate speech and propaganda, and improving the quality of information and communication have become central to maintaining a regime of self-regulation among tech firms. And how they respond will have profound effects on media around the world.

When Facebook released its revamped transparency report on May 15, it provided more granular information on content takedown requests, compliance, and turnaround time. And Twitter announced that it will look at the behavior of accounts in attempting to deal with online harassment and trolling. The announcements coincided with the first day of RightsCon–one of the world’s largest digital rights conferences–and followed the first YouTube-specific transparency report released by Google earlier this month.

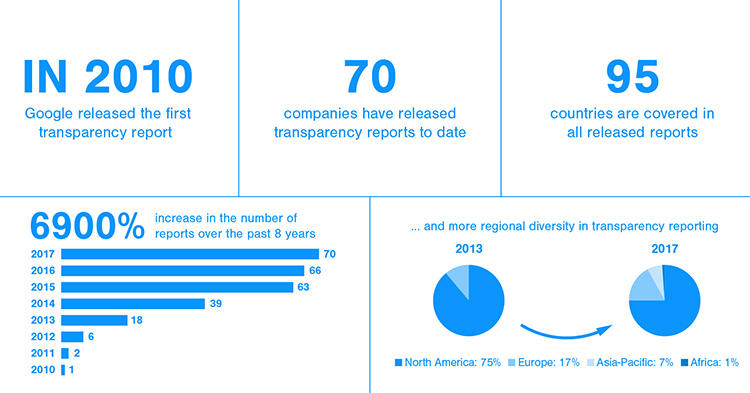

Moves toward greater transparency from these large platforms were welcomed by rights activists. “These overdue but welcome steps lead the maturing movement of so-called transparency reporting, or regular releases of aggregate data on the ways companies impact privacy and freedom of expression online,” Peter Micek, general counsel at Access Now, a digital rights advocacy group that publishes the Transparency Reporting Index, told me at RightsCon.

Facebook has released a lot more data including the rationale behind what content it removed, whether the content was flagged internally or externally, how many people saw it, and how fast the post was taken down. Facebook said its reviewers will now label the violation justifying the removal–a move that could prove helpful in understanding the impact of policies restricting graphic violence or terrorism content.

For example, in the first quarter of this year, Facebook’s report showed that it took action on 3.4 million pieces of content that violated its restrictions on graphic violence, with actions ranging from removing the content to covering it with a warning label. [A detailed definition of how Facebook defines graphic violence can be found here.] Eighty-six percent of the content was identified by the company, and Facebook has attributed a 70 percent increase in content being identified since the prior quarter to improvements in its detection technology.

While providing this information is a welcome step, there is a long way to go in terms of accountability when it comes to content takedowns, especially since there are myriad examples of social media platforms removing journalistic content only to reinstate it when, and if, an uproar occurs.

The day before Facebook released its transparency report, Dawn–one of Pakistan’s leading English-language outlets–reported that Facebook had blocked a 2017 post about judicial activism that Dawn posted to the platform. Facebook later reinstated the post, explaining that it was blocked erroneously, the paper reported.

Such occurrences are all too common for media. In January, the Facebook page for TeleSur, a Venezuela-based public news organization, disappeared for a 24-hour period in what the social media platform referred to as “an internal mistake,” Newsweek reported. Several Palestinian media organizations and journalists whose accounts were suspended had them reinstated following a social media campaign, “#FBcensorsPalestine,” and questions from other media organizations. “The pages were removed in error and restored as soon as we were able to investigate,” a Facebook spokesperson was cited as saying in The Verge. Tens of thousands of videos removed from the YouTube accounts of Syrian media were also eventually reinstated following worldwide criticism, according to reports.

Zayna Aston, a communications and public affairs official for Google and YouTube, said via email that when it comes to videos with graphic content, “Context is very important.” Aston added, “That’s why we published an updated Help Center article with dos and don’ts for uploading graphic violence and content related to terrorism on YouTube.”

With enough outcry over the validity of removed content, it is possible to have it reinstated. But what about the media outlets and journalists who don’t have the networks, the language, or the wherewithal to prompt a global backlash?

Neither the YouTube nor the Facebook transparency report provides insight into the error rate. And there remains no way to independently review or audit takedowns to determine how many journalists or media outlets are affected.

A spokesperson for Facebook said the company may explore measuring its recently launched content appeals process as a way to reflect error rate. A spokesperson for Google said via email that the company’s report was a “first step,” adding, “We’ll include metrics for other violation types in future reports as we improve how we measure them.”

Although Twitter is smaller than Facebook or Google, it has come to play a central role in the media ecosystem. But many journalists, particularly women and minorities, must deal with online trolling and harassment as a daily part of their jobs, leading some to withdraw from Twitter, social media, and, in some cases, even journalism, CPJ has found.

Twitter has struggled with how to address content that does not technically violate its Terms of Service but detracts from what it terms “healthy” conversations. By reframing problematic content as “behavior” instead of “speech” and reducing the visibility of such content, the company might be able to avoid being accused of censorship as it seeks to combat harassment.

“The signals we are using to do this work in product are based upon the behavior and conduct of an account, it’s not based on the content of tweets, it’s not based on opinion or perspective, but on the conduct of the account’s behavior,” Colin Crowell, Twitter’s head of policy, said.

At a RightsCon panel co-organized by CPJ that focused on finding solutions to these issues, this approach seemed to have tentative support from participants working on issues related to online violence and harassment against women and journalists. But more will need to be done.

[Reporting from Toronto, Canada]